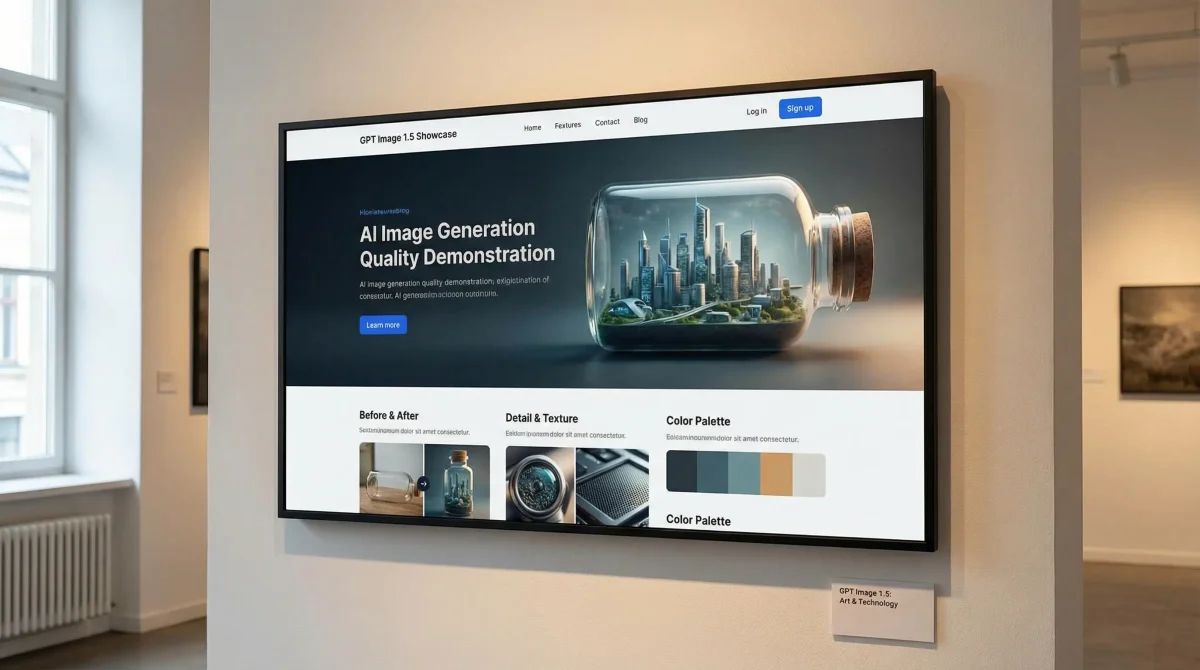

GPT Image 1.5: Complete Guide to OpenAI’s Best Image Generator

OpenAI released GPT Image 1.5 on December 16, 2025. It’s faster than the previous version, better at following instructions, and can actually render readable text in images. That last part is a bigger deal than it sounds.

If you’ve tried AI image generators before, you know the frustration: you ask for a product photo with specific lighting, the model gives you something close but not quite right, and when you try to edit it, everything changes. GPT Image 1.5 fixes some of that. Not all of it, but enough to matter for production work.

This guide covers what’s new, what works, what doesn’t, and when you should use something else instead.

What’s Different About GPT Image 1.5

GPT Image 1.5 (the API calls it gpt-image-1.5) is OpenAI’s second attempt at a production-ready image generator. The first version, GPT Image 1, was fine for experimentation but unreliable for professional work. This one is built for consistency.

Three main improvements: it’s up to 4x faster (8-12 seconds instead of 30-45 for a standard image), it follows complex prompts more accurately, and text rendering actually works for headlines and labels. OpenAI says the model “adheres to your intent more reliably—down to the small details—changing only what you ask for while keeping elements like lighting, composition, and people’s appearance consistent.”

That’s the claim. In practice, it’s better but not perfect.

Core Features

1. Better Instruction Following

You can now give GPT Image 1.5 a prompt like “product in lower-left third, sunset lighting from right, brand logo in upper-right corner, shallow depth of field” and it will usually get all of those elements right. Earlier models would nail two or three and ignore the rest.

This matters because it turns image generation from a creative lottery into something you can actually plan around. If you need a specific composition for a campaign, you can describe it and get it.

2. Editing That Preserves Details

When you edit an image, GPT Image 1.5 tries to change only what you asked for. If you say “change the jacket to blue,” it should leave the face, lighting, and background alone.

OpenAI calls this “region-aware editing.” In effect, the model tries to work out which regions need to change and keep everything else as close to the original as possible. It aims to preserve:

- Brand logos and watermarks

- Lighting direction

- Background elements

- Color grading

- Textures

Wix, the website builder, says they use GPT Image 1.5 to generate “full product image catalogs (variants, scenes, and angles) from a single-source image.” That’s the use case where this feature shines: take one product photo, generate it in 20 different contexts, and the product looks the same in all of them.

3. Text Rendering That Works (Sometimes)

Earlier AI image models couldn’t spell. GPT Image 1.5 can handle short text: headlines, product labels, logo text. It’s good at 3-5 word phrases in common fonts.

It’s still bad at:

- Long paragraphs (anything over 20-30 words gets garbled)

- Handwritten or decorative fonts

- Non-Latin scripts

- Text on curved surfaces

For anything complex, generate the image without text and add it in Figma or Photoshop afterward.

4. Speed

Generation times dropped from 30-45 seconds to 8-12 seconds for standard images. That’s fast enough to iterate: you can test ten variations in about two minutes instead of five to seven.

Benchmarks:

- 1024×1024: 8-12 seconds (was 35-45)

- 1024×1536: 12-18 seconds (was 45-55)

- 1536×1024: 12-18 seconds (was 45-55)
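To sanity-check the headline speedup against these ranges, here is a quick calculation. It is a sketch that compares the midpoints of the old and new ranges listed above; the numbers are the ones quoted in this guide, not independent measurements.

```python
# Generation-time ranges from the benchmark list above, in seconds.
BENCHMARKS = {
    "1024x1024": {"old": (35, 45), "new": (8, 12)},
    "1024x1536": {"old": (45, 55), "new": (12, 18)},
    "1536x1024": {"old": (45, 55), "new": (12, 18)},
}

def midpoint(rng):
    lo, hi = rng
    return (lo + hi) / 2

def midpoint_speedup(entry):
    """Speedup comparing the midpoints of the old and new ranges."""
    return midpoint(entry["old"]) / midpoint(entry["new"])

def batch_minutes(n, per_image):
    """Best- and worst-case minutes for n images generated one after another."""
    lo, hi = per_image
    return n * lo / 60, n * hi / 60

for size, entry in BENCHMARKS.items():
    print(f"{size}: ~{midpoint_speedup(entry):.1f}x faster")
print("10 square images, new:", batch_minutes(10, BENCHMARKS["1024x1024"]["new"]))
print("10 square images, old:", batch_minutes(10, BENCHMARKS["1024x1024"]["old"]))
```

At the midpoints this works out to 4.0x for square images and about 3.3x for the two rectangular sizes. Ten square images take roughly 1.3 to 2 minutes now, versus about 5.8 to 7.5 minutes before.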

5. Lower Costs

Images cost 20% less than GPT Image 1 through the API. Combined with the speed improvement, you get more images for the same budget.
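“More images for the same budget” can be made concrete: a 20% price cut means each image costs 0.8 of the old price, so a fixed budget buys 1 / 0.8 = 1.25 times as many images. The budget and per-image price below are placeholders, not OpenAI’s actual prices; the ratio holds whatever the real price is.

```python
def budget_comparison(budget, old_price, discount=0.20):
    """How many images a fixed budget buys before and after a percentage price cut."""
    new_price = old_price * (1 - discount)
    return budget / old_price, budget / new_price

# Placeholder numbers (hypothetical): a 100-unit budget at 1 unit per image.
before, after = budget_comparison(100, 1.0)
print(f"{before:.0f} images before, {after:.0f} after")
```

In other words, 20% cheaper means 25% more images per budget, before counting any savings from faster iteration.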

How It Compares to Competitors

GPT Image 1.5 vs Google Nano Banana Pro

Google’s Nano Banana Pro came out around the same time and apparently spooked OpenAI enough that Sam Altman called it a “code red” internally.

Nano Banana Pro is better at photorealism. If you need images that look like real photographs, especially natural scenes with people, Nano Banana Pro wins. It’s also better at capturing current aesthetic trends.

GPT Image 1.5 is better at precision. If you need exact layouts, text rendering, or iterative edits that preserve specific details, use GPT Image 1.5. It’s more predictable.

Use Nano Banana Pro for: social media content, lifestyle photography, anything where “looking real” matters more than exact specifications.

Use GPT Image 1.5 for: product catalogs, brand assets with logos, infographics, e-commerce, workflows that need 5+ edits while maintaining consistency.

GPT Image 1.5 vs Midjourney

Midjourney is still the favorite for digital artists. It has better artistic interpretation and a strong community with shared prompts and techniques.

GPT Image 1.5 is integrated into ChatGPT, which means no platform switching. It also has API access for automation. If you’re building image generation into a product, GPT Image 1.5 is easier to work with.

Midjourney wins on creativity. GPT Image 1.5 wins on control.

Real-World Use Cases

E-Commerce Product Catalogs

Photograph a product once on a neutral background. Use GPT Image 1.5 to generate variants in different settings. The product looks consistent across all images, and logos stay intact.

This is cheaper than photoshoots and faster than manual editing.

Marketing and Brand Assets

Generate base designs with brand colors, iterate while preserving logos, create A/B test variants, produce localized versions for different markets.

The logo preservation is critical here. Earlier AI tools would distort or remove logos during edits.

Social Media Content

Generate a master image, then create platform-specific versions:

- Instagram (1:1): 1024×1024

- Instagram Stories (2:3 portrait; crop to 9:16): 1024×1536

- Twitter/X (3:2 landscape): 1536×1024

Same prompt, different size parameters.
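In code, “same prompt, different size parameters” amounts to one request per platform. This sketch assumes the API model ID `gpt-image-1.5` given in this guide and the Images API’s `model`, `prompt`, `size`, and `quality` parameters; the platform keys are hypothetical labels. Reducing each size to its simplest ratio also shows that 1024×1536 is 2:3 and 1536×1024 is 3:2.

```python
from math import gcd

MODEL = "gpt-image-1.5"  # model ID as given in this guide

# Sizes from the list above, as "WIDTHxHEIGHT" strings for the API's size parameter.
PLATFORM_SIZES = {
    "instagram_feed": "1024x1024",
    "instagram_stories": "1024x1536",
    "twitter_x": "1536x1024",
}

def aspect_ratio(size):
    """Reduce a 'WIDTHxHEIGHT' string to its simplest ratio, e.g. '1024x1536' -> (2, 3)."""
    width, height = (int(v) for v in size.split("x"))
    g = gcd(width, height)
    return width // g, height // g

def platform_requests(prompt, quality="medium"):
    """Same prompt, one set of Images API keyword arguments per platform."""
    return {
        platform: {"model": MODEL, "prompt": prompt, "size": size, "quality": quality}
        for platform, size in PLATFORM_SIZES.items()
    }

for platform, kwargs in platform_requests("Minimalist flat-lay of a ceramic mug").items():
    print(platform, kwargs["size"], aspect_ratio(kwargs["size"]))
```

Each dictionary can be passed as `client.images.generate(**kwargs)` with the official SDK.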

Design Concept Visualization

Prototype visual concepts quickly, test multiple directions, gather feedback, refine the winner. Some design teams report cutting concept phase time from days to hours.

Editorial and Publishing

Article headers, infographics, editorial illustrations. Good for conceptual work and data visualizations with readable labels.

Training Materials

Instructional visuals, diagrams, scenario illustrations. Useful for creating diverse representation in educational content.

Prompt Engineering

Good prompts follow this structure:

[Subject] + [Setting] + [Action] + [Style] + [Lighting] + [Composition] + [Quality]
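If you generate prompts programmatically, the formula maps naturally onto a small data structure. This is a hypothetical helper, not part of any OpenAI library: it keeps the slots in the formula’s order and skips any you leave empty. The `quality` slot here is descriptive prompt text, not the API’s `quality` parameter.

```python
from dataclasses import dataclass, fields

@dataclass
class PromptSpec:
    # Field order follows the formula: Subject, Setting, Action, Style, Lighting, Composition, Quality.
    subject: str
    setting: str = ""
    action: str = ""
    style: str = ""
    lighting: str = ""
    composition: str = ""
    quality: str = ""

    def render(self) -> str:
        """Join the non-empty slots in formula order, comma-separated."""
        parts = [getattr(self, f.name).strip() for f in fields(self)]
        return ", ".join(p for p in parts if p)

spec = PromptSpec(
    subject="luxury watch",
    setting="on black marble surface",
    lighting="dramatic side lighting with soft shadows",
    composition="45-degree angle",
    quality="high-end commercial quality",
)
print(spec.render())
```

Storing prompts as structured fields rather than free text also makes it easy to vary one element (say, lighting) while holding the rest fixed.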

Product Photography

Template: “Professional product photo of [product] on [surface], [lighting], [angle], [mood], [quality]”

Example: “Professional product photo of luxury watch on black marble surface, dramatic side lighting with soft shadows, 45-degree angle, elegant and premium mood, high-end commercial quality”

Portrait Photography

Template: “[Shot type] portrait of [subject], [expression], wearing [clothing], [background], [lighting], [specs]”

Example: “Close-up portrait of middle-aged woman with short gray hair, genuine smile, wearing casual denim jacket, blurred outdoor background, golden hour natural lighting, shallow depth of field”

Infographics

Template: “Clean infographic showing [topic], [layout], [colors], [headline], [quality]”

Example: “Clean infographic showing quarterly sales growth, vertical bar chart layout, blue and white color scheme, bold headline ‘2025 Q4 Results’ at top with percentage labels, professional business design quality”
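The three templates above can be kept as format strings and filled in one place. This sketch (hypothetical helper names) raises an error when a slot is missing instead of silently sending a half-filled prompt; filling the product template with the example’s values reproduces the product example word for word.

```python
TEMPLATES = {
    "product": "Professional product photo of {product} on {surface}, {lighting}, {angle}, {mood}, {quality}",
    "portrait": "{shot_type} portrait of {subject}, {expression}, wearing {clothing}, {background}, {lighting}, {specs}",
    "infographic": "Clean infographic showing {topic}, {layout}, {colors}, {headline}, {quality}",
}

def fill(template_name, **slots):
    """Fill a named template; fail loudly if any slot is missing."""
    template = TEMPLATES[template_name]
    try:
        return template.format(**slots)
    except KeyError as missing:
        raise ValueError(f"missing slot {missing} for template {template_name!r}") from None

print(fill(
    "product",
    product="luxury watch",
    surface="black marble surface",
    lighting="dramatic side lighting with soft shadows",
    angle="45-degree angle",
    mood="elegant and premium mood",
    quality="high-end commercial quality",
))
```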

Text Rendering Tips

- Keep text short (3-5 words per element)

- Describe a common font style rather than naming a specific font (“bold sans-serif” works better than “Helvetica Neue”)

- Specify position explicitly (“headline centered at top”)

- Request high contrast (“white text on dark background”)

- Avoid small fonts (under 18pt equivalent)

For complex text, generate the image without it and add text in Figma/Canva/Photoshop afterward.
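The tips above can be encoded as a small check that runs before any text goes into a prompt. This is a hypothetical helper: the five-word limit comes from the guideline above, and the default style and contrast phrases are only examples.

```python
MAX_WORDS = 5  # upper end of the "3-5 words per element" guideline

def fits_guideline(text, max_words=MAX_WORDS):
    """True if a text element is short enough to ask the model to render it."""
    return len(text.split()) <= max_words

def text_instruction(text, position, style="bold sans-serif", colors="white text on dark background"):
    """Prompt fragment that quotes the exact text and spells out style, position and contrast."""
    if not fits_guideline(text):
        raise ValueError(f"{text!r} is too long; add it in an editor after generation")
    return f'{style} text reading "{text}", {position}, {colors}'

print(text_instruction("2025 Q4 Results", "headline centered at top"))
```

Raising an error rather than trimming the text keeps the decision with you: long copy goes into Figma, Canva, or Photoshop instead.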

Refinement Workflow

For high-quality projects:

Step 1: Initial concepts (low quality, broad prompt)

- Generate 3-5 variations

- Pick the best direction

Step 2: Refinement (medium quality, detailed prompt)

- Add specific constraints

- Adjust composition, lighting, elements

- Test 2-3 variants

Step 3: Detail polish (high quality, precise edits)

- Make targeted edits

- Adjust one element at a time

- Preserve everything else

Step 4: Final production (high quality)

- Regenerate with optimized prompt

- Export at full resolution

This takes 15-25 minutes but produces better results than single-shot generation.
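One way to keep this workflow consistent across a team is to write it down as data. The sketch below is one reading of the four steps, not an official recipe: the variant counts pick a value from each stated range, and the quality strings match the API tiers listed later in this guide (low, medium, high).

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    name: str
    quality: str   # value for the API's quality parameter: "low", "medium" or "high"
    variants: int  # images to request at this stage (per edit, for the polish stage)
    action: str    # "generate" or "edit"

# Counts are assumptions chosen from the ranges above (3-5 concepts, 2-3 refinements).
WORKFLOW = [
    Stage("initial concepts", "low", 4, "generate"),
    Stage("refinement", "medium", 3, "generate"),
    Stage("detail polish", "high", 1, "edit"),
    Stage("final production", "high", 1, "generate"),
]

def images_by_quality(workflow=WORKFLOW):
    """How many images each quality tier produces in one pass through the workflow."""
    counts = Counter()
    for stage in workflow:
        counts[stage.quality] += stage.variants
    return counts

for stage in WORKFLOW:
    print(f"{stage.name}: {stage.action} x{stage.variants} at {stage.quality} quality")
print(dict(images_by_quality()))
```

Counting images per tier makes the cost of a workflow visible before you run it.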

Common Mistakes

1. Vague Prompts

Bad: “Create a nice image of a product”

Good: “Professional product photo of luxury watch on black marble surface, dramatic side lighting with soft shadows, 45-degree angle, elegant mood, high-end commercial quality”

2. Expecting Perfect Text

Text rendering is better but not perfect. Keep text short, use common fonts, have a backup plan to add text in traditional tools.

3. Over-Editing

Quality degrades after 6-8 consecutive edits. If you need extensive changes, regenerate from scratch instead of editing repeatedly.
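If your pipeline chains edits automatically, a counter can flag when to stop editing and regenerate. This is a hypothetical bookkeeping class; the default limit of 6 is the low end of the 6-8 range above and is an assumption you should tune.

```python
MAX_CONSECUTIVE_EDITS = 6  # low end of the 6-8 range above; an assumption, tune per project

class EditChain:
    """Hypothetical helper: counts edits since the last fresh generation."""

    def __init__(self, limit=MAX_CONSECUTIVE_EDITS):
        self.limit = limit
        self.edits = 0
        self.history = []  # ("generate" | "edit", text) pairs, oldest first

    def record_generation(self, prompt):
        self.edits = 0  # a fresh generation resets the chain
        self.history.append(("generate", prompt))

    def record_edit(self, instruction):
        self.edits += 1
        self.history.append(("edit", instruction))

    def should_regenerate(self):
        return self.edits >= self.limit
```

When `should_regenerate()` returns True, the `history` list gives you the base prompt and every edit since, which you can fold by hand into a new, more detailed prompt.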

4. Not Saving Prompts

Document successful prompts with parameters and results. You’ll want to reproduce them later.
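A plain JSON Lines file is enough for this. The sketch below uses only the standard library; the record fields (prompt, API parameters, output file, free-form notes) are a suggested layout, not a required schema.

```python
import json
import time
from pathlib import Path

def log_prompt(path, prompt, params, result_file, notes=""):
    """Append one successful generation to a JSON Lines log and return the record."""
    record = {
        "timestamp": time.strftime("%Y-%m-%dT%H:%M:%S"),
        "prompt": prompt,
        "params": params,          # e.g. {"size": "1024x1024", "quality": "high"}
        "result_file": result_file,
        "notes": notes,
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record

def load_prompts(path):
    """Read every logged record back as a list of dicts."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

One line per generation keeps the log append-only and easy to search or diff.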

Limitations

GPT Image 1.5 is better than GPT Image 1, but it’s not magic.

Technical limitations:

- Images with 10+ distinct objects show spatial inconsistencies

- Some outputs still have the “AI look” (over-smoothed, unnaturally perfect)

- Body text longer than 20-30 words contains errors

- Training data skews Western (regional architecture and cultural details may lack authenticity)

- Quality degrades after 6-8 consecutive edits

When to use traditional photography/design instead:

- Technical precision is required (architecture, engineering, medical)

- Legal requirements mandate human-created content

- Brand values emphasize human artistry

- Budget allows for professional services

How to Access GPT Image 1.5

Available to all ChatGPT users (Free, Plus, Team, Enterprise) since December 16, 2025.

ChatGPT Interface

- Log into chatgpt.com

- Click “Images” tab in left sidebar

- Enter prompt (up to 2000 characters)

- Click “Generate”

- Wait 8-18 seconds

The interface has preset styles and filters you can apply without writing prompts.

API Access

For developers and high-volume generation, use the API through OpenAI directly or platforms like EvoLink.AI.

API features:

- Text-to-image generation

- Image-to-image transformation

- Natural language editing

- Quality tier selection (low, medium, high, auto)

- Batch generation

- Custom parameters
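A minimal sketch of text-to-image generation and natural-language editing with the official `openai` Python package. Assumptions: the model ID is `gpt-image-1.5` as stated in this guide, images come back base64-encoded in `b64_json` (as they do for OpenAI’s gpt-image models), and batch generation maps to the `n` parameter. The functions accept an optional client so you can reuse one or substitute a stub in tests; EvoLink.AI and other third-party platforms are not covered here.

```python
import base64
from pathlib import Path

MODEL = "gpt-image-1.5"  # model ID as given in this guide

def _default_client():
    from openai import OpenAI  # pip install openai; reads OPENAI_API_KEY from the environment
    return OpenAI()

def generate_images(prompt, client=None, size="1024x1024", quality="low", n=1):
    """Text-to-image: return a list of decoded image bytes."""
    client = client or _default_client()
    response = client.images.generate(model=MODEL, prompt=prompt, size=size, quality=quality, n=n)
    return [base64.b64decode(item.b64_json) for item in response.data]

def edit_image(image_path, instruction, client=None):
    """Natural-language edit of an existing image: return the edited image bytes."""
    client = client or _default_client()
    with open(image_path, "rb") as image_file:
        response = client.images.edit(model=MODEL, image=image_file, prompt=instruction)
    return base64.b64decode(response.data[0].b64_json)

def save_all(images, stem="output"):
    """Write each image to <stem>_<i>.png and return the paths."""
    paths = []
    for i, data in enumerate(images):
        path = Path(f"{stem}_{i}.png")
        path.write_bytes(data)
        paths.append(path)
    return paths
```

Typical use: `save_all(generate_images("Professional product photo of ...", n=3), stem="watch")` for drafts, then `edit_image("watch_0.png", "change the strap to brown leather")` for a targeted edit.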

Cost Optimization

1. Quality Tiers

Use low or medium for initial iterations and high for final production. Depending on how many drafts you generate per final image, this can cut costs by 40-60%.
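How much tiering saves depends on the ratio of drafts to finals. The relative costs below (low = 1, high = 4) are hypothetical placeholders, not OpenAI’s price list; substitute current per-image prices for your size and tier.

```python
def tiered_savings(n_drafts, n_finals, draft_cost, final_cost):
    """Fraction saved versus generating every image at the final (high) tier."""
    all_high = (n_drafts + n_finals) * final_cost
    tiered = n_drafts * draft_cost + n_finals * final_cost
    return 1 - tiered / all_high

# Placeholder relative costs (low = 1, high = 4).
print(f"{tiered_savings(10, 2, 1, 4):.1%}")  # many drafts, few finals
print(f"{tiered_savings(4, 4, 1, 4):.1%}")   # even split
```

With these placeholder ratios the savings come to 62.5% for a draft-heavy project and 37.5% for an even split, so the more exploration you do, the more tiering pays off.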

2. Size Optimization

Generate at the smallest size that meets your needs. Larger images cost more.
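Picking the smallest adequate size is easy to automate. This sketch assumes the three sizes used throughout this guide are the ones available; it returns the size with the fewest pixels that still covers your target width and height, or None if none does (in which case you would need to upscale separately).

```python
# Sizes used throughout this guide; assumed to be the available options.
SIZES = ["1024x1024", "1024x1536", "1536x1024"]

def smallest_size(min_width, min_height):
    """Smallest size (by pixel count) covering the target dimensions, or None."""
    candidates = []
    for size in SIZES:
        w, h = map(int, size.split("x"))
        if w >= min_width and h >= min_height:
            candidates.append((w * h, size))
    return min(candidates)[1] if candidates else None

print(smallest_size(800, 800))
print(smallest_size(1000, 1400))
```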

3. Prompt Efficiency

Shorter prompts use fewer tokens. Remove unnecessary adjectives, use structured formats, test minimal viable prompts.

4. Caching

Store successful generations with metadata. Build a library of base images for future editing instead of regenerating.
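A simple on-disk cache keyed by a hash of the prompt and parameters avoids paying twice for an identical request. This is a sketch using only the standard library; `generate` is any callable you supply (for example, a wrapper around the API call) that returns image bytes, and the directory layout is an assumption.

```python
import hashlib
import json
from pathlib import Path

class ImageCache:
    """Store generated images on disk, keyed by a hash of the prompt and parameters."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def key(self, prompt, params):
        # sort_keys makes the hash independent of dict ordering.
        payload = json.dumps({"prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]

    def get_or_generate(self, prompt, params, generate):
        """Return cached bytes if present; otherwise call generate(prompt, params) and store both image and metadata."""
        k = self.key(prompt, params)
        image_path = self.root / f"{k}.png"
        if image_path.exists():
            return image_path.read_bytes()
        data = generate(prompt, params)
        image_path.write_bytes(data)
        meta = {"prompt": prompt, "params": params}
        (self.root / f"{k}.json").write_text(json.dumps(meta, indent=2), encoding="utf-8")
        return data
```

The JSON sidecar next to each image is what turns the cache into a searchable library of base images for later edits.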

5. Hybrid Workflows

Generate base images with AI, add complex text/logos in traditional tools, use AI for variations of proven designs.

What’s Next

GPT Image 1.5 is good enough for production work in specific use cases. The speed, instruction following, and detail preservation improvements address real problems.

But OpenAI admits “results remain imperfect” and “there is still significant room for improvement.” Text rendering still fails on complex layouts. Photorealism has a ceiling. Cultural specificity is limited.

For now, treat GPT Image 1.5 as one tool in your toolkit. It’s fast and good at specific tasks: product variants, brand assets with logos, infographics, iterative editing. It’s not good at everything.

The key is knowing when to use it and when to reach for something else.